A search for a local sandwich shop turned into a near-miss device compromise. Here’s how Keep Aware kept it from going any further.

LLMs Change How We Search

Large language models (LLMs) like ChatGPT are changing how people search online. They summarize, suggest, and even serve up clickable links. But with that convenience comes risk, especially when users trust the output a little too quickly.

A recent attack shows just how easily that trust can be exploited.

It Started with Lunch

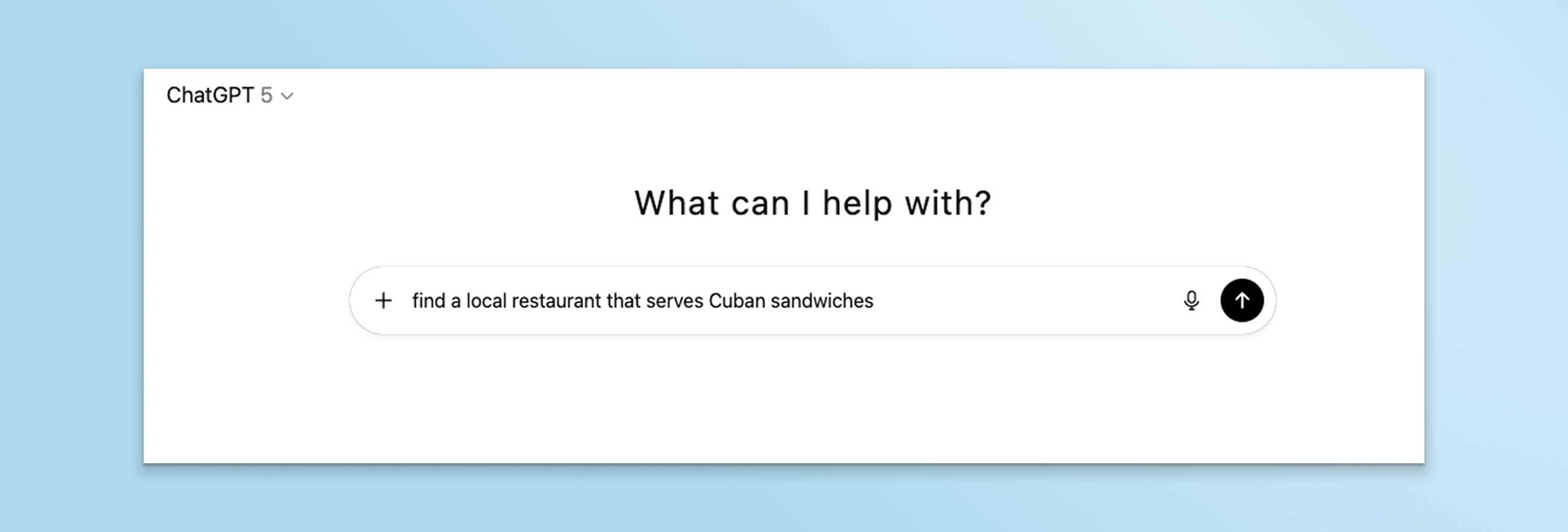

A user asked ChatGPT to find a local restaurant that serves certain sandwiches. Harmless enough. But one of the links in the chatbot's results pointed to a recently registered, risky site. Trusting the LLM's output, the user clicked it, and the link opened in a new browser tab.

But it didn’t stop there.

The Fake CAPTCHA Trap

That link, which carried the restaurant search terms in its URI query parameters, redirected the user to another domain, one already flagged as malicious by a dozen threat engines on VirusTotal. The page displayed a fake CAPTCHA, pretending to check whether the user was human. Behind the scenes, its scripts decoded base64 data, referenced PowerShell, called the JavaScript `exec` command, and manipulated the clipboard, all common markers of a ClickFix attack.
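To make those markers concrete, here is a minimal sketch of a static check over a page's script source. It is an illustration only, not how Keep Aware or any other product detects ClickFix. The pattern names, the threshold of three markers, and the reading of the `exec` command as `document.execCommand` are all assumptions made for this example.

```python
from __future__ import annotations

import re

# Hypothetical marker patterns, loosely modeled on the behaviors described above.
# Real pages are often obfuscated, so simple patterns like these will miss a lot.
MARKERS = {
    # atob() is the browser's built-in base64 decoder.
    "base64_decoding": re.compile(r"\batob\s*\("),
    "powershell_reference": re.compile(r"powershell", re.IGNORECASE),
    # Assumption: the "exec command" refers to document.execCommand.
    "exec_command": re.compile(r"document\.execCommand\s*\("),
    "clipboard_write": re.compile(
        r"navigator\.clipboard\.writeText\s*\(|clipboardData\.setData\s*\("
    ),
}


def find_clickfix_markers(script_source: str) -> set[str]:
    """Return the names of all marker patterns found in the script text."""
    return {name for name, pattern in MARKERS.items() if pattern.search(script_source)}


def looks_like_clickfix(script_source: str, threshold: int = 3) -> bool:
    """Flag the script when at least `threshold` distinct markers co-occur."""
    return len(find_clickfix_markers(script_source)) >= threshold
```

Requiring several markers together, rather than any single one, reflects the fact that each behavior is common on legitimate pages when taken alone. It is the combination that is suspicious.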

The moment the user’s clipboard was populated with suspicious system commands, Keep Aware paused the session and prevented the user from pasting the malicious code into their device’s terminal, effectively stopping device compromise.
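As a rough illustration of the idea, and not Keep Aware's actual logic, the sketch below checks clipboard text for command-like content and empties it when something suspicious is found. The pattern list and the function names are hypothetical, and a production control would need far broader coverage.

```python
import re

# Hypothetical patterns for command-line content that rarely belongs in a clipboard
# populated by a web page. This list is illustrative, not exhaustive.
SUSPICIOUS_PATTERNS = [
    re.compile(r"\bpowershell(\.exe)?\b", re.IGNORECASE),
    re.compile(r"\bcmd(\.exe)?\s+/c\b", re.IGNORECASE),
    re.compile(r"\bmshta(\.exe)?\b", re.IGNORECASE),
    re.compile(r"-enc(odedcommand)?\b", re.IGNORECASE),
    re.compile(r"\b(iex|invoke-expression)\b", re.IGNORECASE),
    re.compile(r"\bcurl\b.*\|\s*(ba)?sh\b", re.IGNORECASE),
]


def is_suspicious_clipboard(text: str) -> bool:
    """Return True if the clipboard text matches any command-like pattern."""
    return any(pattern.search(text) for pattern in SUSPICIOUS_PATTERNS)


def sanitize_clipboard(text: str) -> str:
    """Return an empty string for suspicious content, otherwise the text unchanged."""
    return "" if is_suspicious_clipboard(text) else text
```

Checking at the moment of copy, rather than at paste, means the dangerous text never reaches the terminal even if the user follows the page's instructions.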

What is ClickFix?

ClickFix is a newer class of browser-based attacks. It dupes users into copying and pasting dangerous commands, often disguised as verification codes or as solutions to fake technical problems. The user is prompted to paste those commands into their device's terminal, which can lead to system compromise, credential theft, data loss, and persistent malware.

These social engineering attacks thrive on user behavior. Previously, attackers lured users to malicious content through phishing emails, SEO-poisoned search results, malvertisements, and compromised websites. Now AI chatbots are an additional avenue, serving end users links to malicious sites.

How Keep Aware Neutralized the Threat

In this case, Keep Aware’s browser security platform:

- Flagged both domains as risky due to their age and page characteristics.

- Blocked the user from interacting further once the ClickFix behavior was detected.

- Wiped the malicious content at the moment of clipboard copy to contain the threat.
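The first bullet, flagging a domain by its age, can be sketched as a simple date comparison. This is an assumption-laden example: the 30-day threshold is arbitrary, the function names are hypothetical, and the registration date is taken as an input that would come from a source such as WHOIS or RDAP. How Keep Aware actually scores domain age is not described here.

```python
from datetime import date


def domain_age_days(registered_on: date, today: date) -> int:
    """Number of days between the registration date and the reference date."""
    return (today - registered_on).days


def is_young_domain(registered_on: date, today: date, max_age_days: int = 30) -> bool:
    """Flag domains registered fewer than `max_age_days` days ago (assumed threshold)."""
    return domain_age_days(registered_on, today) < max_age_days
```

Age alone is a weak signal, since many new domains are legitimate, which is presumably why it is paired with page characteristics in the list above.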

No malicious command was pasted. No system code was executed. No device was breached. The user closed the tab and moved on—this time, using a simple Google search to find food.

Protect Users Who Use LLMs

LLMs like ChatGPT don’t seem to evaluate link safety. They don’t inform the user if a provided site was created last week to harvest credentials or if the site’s CAPTCHA is a front for clipboard hijacking. That’s where browser security matters most—catching threats where they happen, in real time.

As LLMs further integrate into businesses and AI-powered workflows become more common, we can’t rely on traditional security defenses alone to protect employees and business assets. Security has to move with the user. And increasingly, that means securing the browser.

IOCs

Domains:

tq[.]fdkox-d[.]online

api[.]meedoobestkeyplati[.]com

URLs:

tq[.]fdkox-d[.]online/filter
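For readers who want to check logs against these indicators, a minimal matching sketch follows. It uses only the domain that also appears in the URL list above. The helper names are hypothetical, and the matching rule (exact host or any subdomain) is an assumption that may be broader than some environments want.

```python
from urllib.parse import urlsplit


def refang(indicator: str) -> str:
    """Convert a defanged indicator such as 'a[.]b' back to 'a.b' for matching."""
    return indicator.replace("[.]", ".")


# Kept defanged in source so the indicator is not accidentally clickable.
IOC_DOMAINS = {refang("tq[.]fdkox-d[.]online")}


def matches_ioc(url: str) -> bool:
    """Return True if the URL's host equals an IOC domain or is a subdomain of one."""
    host = (urlsplit(url).hostname or "").lower()
    return any(host == domain or host.endswith("." + domain) for domain in IOC_DOMAINS)
```

Note that `urlsplit` only extracts a hostname when the URL includes a scheme, so bare hostnames from proxy logs would need a scheme prepended first.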